Curious about running your first R script inside SQL Server and want to see what it looks like? Unfortunately, it’s not a mix of R and T-SQL code, because R commands are executed in a separate engine that is installed alongside SQL Server. To bridge the two, Microsoft introduced the sp_execute_external_script stored procedure, which takes a number of parameters.

To illustrate, let’s look at the two required parameters, @language and @script. The first currently accepts only one value: R. Microsoft plans to support more languages in the future; for example, Python and Julia were mentioned at PASS Summit 2015. The second parameter carries the R script itself. Let’s start with a very simple example that prints information about the built-in iris dataset. R ships with a number of sample datasets like this one, which is very convenient and makes it easier to start experimenting.

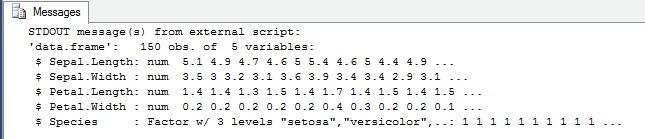

execute sp_execute_external_script

@language = N'R',

@script = N'str(iris);'

with result sets none;

go

The output tells us that the iris dataset has 150 rows and 5 columns. It also shows the column names, their data types, and the first few values.

All other parameters are optional, but you will probably use them a lot, especially those responsible for data exchange between SQL Server and the R environment. For instance, you can send data into R by passing an ordinary SELECT statement to the @input_data_1 parameter. Inside the R script, this data is available as a data frame with the default name InputDataSet, unless you have changed it with the @input_data_1_name parameter. At the moment, only one input dataset is supported. Another key thing to remember: to return data from R as the output of sp_execute_external_script, the R script must place it in a data frame with the default name OutputDataSet, or with whatever name you specify in the @output_data_1_name parameter. In the second example, I pass a simple SELECT statement as input under the default name and return it unchanged.

execute sp_execute_external_script

@language = N'R',

@script = N'OutputDataSet <- InputDataSet;',

@input_data_1 = N'select 1;'

with result sets undefined;

go
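To see the round trip do some actual work, here is a minimal sketch in which R computes a new column from the input instead of echoing it back. The column names a, b, and total are made up for illustration, and the default dataset names are assumed.

```sql
-- Sketch only: the column names a, b, and total are illustrative assumptions.
execute sp_execute_external_script
    @language = N'R',
    -- InputDataSet arrives in R as a data frame; build the output from its columns.
    @script = N'OutputDataSet <- data.frame(total = InputDataSet$a + InputDataSet$b);',
    @input_data_1 = N'select 1 as a, 2 as b;'
with result sets undefined;
go
```

The call should return a single row with the sum of the two input columns, which shows that the R code ran against the data supplied by the SELECT statement.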

If you don’t want to use the default names, or you already have a script with other names and don’t want to touch it, you can define the names of input and output datasets.

execute sp_execute_external_script

@language = N'R',

@script = N'SQLOut <- SQLIn;',

@input_data_1 = N'select 1;',

@input_data_1_name = N'SQLIn',

@output_data_1_name = N'SQLOut'

with result sets undefined;

go

The result of the execution will be the same.

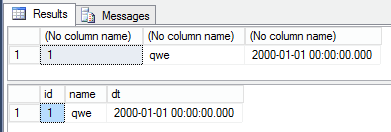

You have probably noticed that the output has no column names. This is because I used the with result sets undefined option. To control the results better, you can describe the returned dataset explicitly, listing each column with its name and type. The two calls below differ only in that clause.

execute sp_execute_external_script

@language = N'R',

@script = N'SQLOut <- SQLIn;',

@input_data_1 = N'select 1 as id, N''qwe'' as name, cast(''20000101'' as datetime) as dt;',

@input_data_1_name = N'SQLIn',

@output_data_1_name = N'SQLOut'

with result sets undefined;

go

execute sp_execute_external_script

@language = N'R',

@script = N'SQLOut <- SQLIn;',

@input_data_1 = N'select 1 as id, N''qwe'' as name, cast(''20000101'' as datetime) as dt;',

@input_data_1_name = N'SQLIn',

@output_data_1_name = N'SQLOut'

with result sets ((id int not null, name varchar(100) not null, dt datetime not null));

go

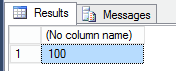
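The same technique works for data that originates in R. As a sketch, the following call returns the iris dataset from the first example as a named result set. The column types are my assumption about a reasonable mapping (R numeric to float, the Species factor to varchar), so adjust them to your needs.

```sql
-- Sketch only: the SQL types chosen for each column are assumptions.
execute sp_execute_external_script
    @language = N'R',
    -- No input query is needed; the data frame comes from R itself.
    @script = N'OutputDataSet <- iris;'
with result sets ((
    [Sepal.Length] float not null,
    [Sepal.Width]  float not null,
    [Petal.Length] float not null,
    [Petal.Width]  float not null,
    [Species]      varchar(100) not null
));
go
```

Because the R column names contain dots, they are wrapped in square brackets on the SQL side.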

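Furthermore, you can pass individual scalar variables through the @params argument: declare them there, then supply their values as additional named arguments. Inside the R script, each variable is available under the same name without the @ prefix. Add the output keyword to any variable whose value you want to get back.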

declare @in_v int = 100, @out_v int;

execute sp_execute_external_script

@language = N'R',

@script = N'out_v1 <- in_v1;',

@params = N'@in_v1 int, @out_v1 int output',

@in_v1 = @in_v,

@out_v1 = @out_v output

with result sets none;

select @out_v;

go
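Parameters and an input dataset can be combined in one call. The sketch below uses a made-up three-row input query and a hypothetical parameter named @row_count: R counts the rows and hands the number back through the output parameter.

```sql
-- Sketch only: the input query and the name @row_count are illustrative assumptions.
declare @rows int;

execute sp_execute_external_script
    @language = N'R',
    -- Inside R the parameter is visible as row_count (no @ prefix).
    @script = N'row_count <- nrow(InputDataSet);',
    @input_data_1 = N'select 1 as id union all select 2 union all select 3;',
    @params = N'@row_count int output',
    @row_count = @rows output
with result sets none;

select @rows as row_count;
go
```

Since the script does not fill OutputDataSet, the call uses with result sets none, and the only value that comes back is the output parameter.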

That is all you need to start executing basic R scripts inside SQL Server. In the next posts, I will show how to control parallel execution, work with large data volumes, and handle some data type considerations you should know about when working with R.